Experimental Games

Intro to Experimental Games

Week 1

In the first week of Experimental Games, we talked about what makes a game experimental and the various ways in which a game can be experimental.

A game can be experimental through its aesthetics, audio, mechanics or narrative, by applying game design and development methods to non-games, and by using different types of peripherals, software or hardware.

For aesthetics we looked at the game Okami (Clover Studio, 2006). Its art style is reminiscent of traditional Japanese calligraphy strokes, making it a very unique game in terms of visual experience.

In terms of audio we looked at Patapon (Pyramid, 2007), which mixes the rhythm and strategy genres: the player controls a tribe through sound and rhythm while still having strategic control over it.

In Monument Valley (Ustwo, 2014) we saw how the developers played with mechanics, taking inspiration from the works of the artist M.C. Escher (1898-1972): the game takes architecturally impossible structures and makes them walkable, turning them into puzzles.

For narrative we watched the trailer for Returnal (Housemarque, 2021). From the trailer the narrative seemed cryptic and, to me personally, provoked a sense of confusion, suspense and intrigue. It is perhaps experimental in trying to evoke emotions in the player through storytelling.

We also looked at the work of Gary James McQueen (2021), who used Unreal Engine and game development software to design garments and direct a fashion show showcasing his designs inside a game engine. I found it a very interesting use of the technology, as it shows that there are other applications where it can serve as an alternative to resource-consuming methods.

Lastly we saw a video preview of Johann Sebastian Joust (GameSpot, 2012), a game purposely made to be played by a group of people. It uses PlayStation Move controllers to sense movement velocity: the players move around a physical room holding a controller, and any player who moves too fast is out of the game. It was a very interesting use of peripherals. Moving games away from a screen and having players interact with a game in a different way interests me, as I tend to appreciate games as an interactive experience.

The lecture gave me great insight into how far we can take game development technology, not only to craft unique and fun experiences but also in the range of possibilities it holds for other applications.

References

Clover Studio (2006) Okami [Video Game]. Capcom.

Housemarque (2021) Returnal [Video Game]. Sony Interactive Entertainment.

GameSpot (2012) Johann Sebastian Joust Video Preview. Available at: https://youtu.be/QD5GnLpj7Lo (Accessed 13 February 2023).

Gary James McQueen (2021) GARY JAMES MCQUEEN "Guiding Light". Available at: https://youtu.be/_7y0qbs71Ec (Accessed 13 February 2023).

Pyramid (2007) Patapon [Video Game]. Sony Computer Entertainment.

Ustwo (2014) Monument Valley [Video Game]. Ustwo Games.

Experimental Game Analysis

The Stanley Parable

The Stanley Parable (Galactic Cafe, 2013) is a first-person adventure game in which the player plays as Stanley, an office worker who discovers that his co-workers have disappeared (The Stanley Parable: Ultra Deluxe, no date).

The experimental aspect of The Stanley Parable (TSP) lies in its narrative structure; Alovisi (2015) describes the game as a "narrative sandbox experience". In TSP the narrator both describes the player's actions and instructs the player; however, the player can choose whether or not to follow the narrator's instructions. The consequences of these choices are the multiple branching endings that can be experienced. The narrator constantly interacts with the player, commenting on their decisions while playing with the player's power of choice, and in some instances breaking the fourth wall.

TSP distinguishes itself by forming a unique relationship between the narrator and the player. The narrator can be seen as both a protagonist and an antagonist, and the way he treats the player is more involved and emotional than is usual for a narrator, who is generally not supposed to be obstructive or to influence the player's choices (Financial Times, 2022).

Another way the game defies what makes up a game is that there is no "winning", only multiple experiences of what Stanley goes through in his search for answers, each reaching a different outcome.

The Stanley Parable is uniquely interesting in the way it approaches narrative and the way it wants the player to interact with the game. To quote the official website: "The Stanley Parable is a game that plays you" (The Stanley Parable: Ultra Deluxe, no date).

References:

Alovisi, E. (2015) Beyond narration: The Stanley Parable as a narrative sandbox experience. Doctoral dissertation. UCL Institute of Education.

Financial Times (2022) ‘The Stanley Parable — gaming’s great postmodern experiment still inspires’, 24 May.

Galactic Cafe (2013) The Stanley Parable [Video game]. Galactic Cafe.

The Stanley Parable: Ultra Deluxe (no date). Available at: https://www.stanleyparable.com/ (Accessed: 17 February 2023).

Experimental Aesthetics

Week 2

During week 2 we covered Experimental Aesthetics.

We talked about art direction and how to define a game's identity through its visuals.

As an example we looked at Paper Mario: Color Splash (published by Nintendo), an action-adventure role-playing game in which the main character and some other characters are portrayed as paper cut-out versions of the original characters in a 3D environment. The environment itself is made to look as if it were made of paper, and the game takes the "paper" element beyond visual aesthetics by using it in its game mechanics.

We also looked into shaders, which manipulate render data and can be used to directly change how a game looks.

We then talked about limitations as a way to develop aesthetics: restricting certain visual aspects or applying rules during development, such as rules on colour use, dimensions or resolution.

I am not very artistic, but having the art style dictate the core gameplay in more ways than just visually makes for interesting concepts.

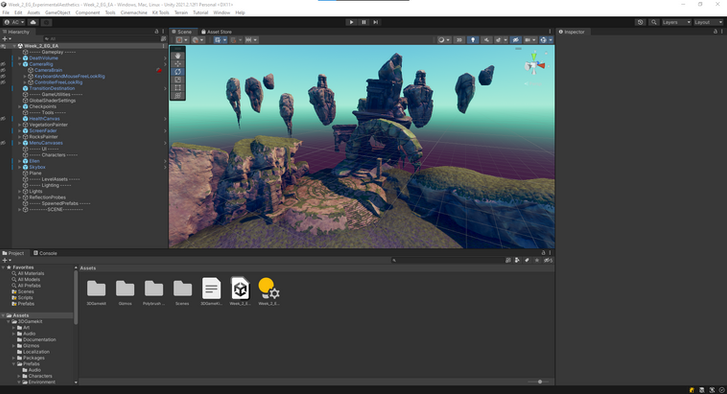

Unity Scene

The workshop task was to create an aesthetically pleasing or displeasing scene in Unity using assets from the Unity Asset Store.

I have always been fond of abandoned and/or remote outdoor areas, so I searched for an asset pack with those and settled on the 3D Game Kit.

Then I wanted to add a shader to the camera to change the look of the scene. I looked at a few such shaders in the Asset Store and then tried the Pixelation shader.

Besides the pixelation shader, the package included another type of shader, a "chunky" shader, which rendered the scene in 4x4 pixel samples of black and white.

I found the results very interesting; it seemed to produce an effect similar to one of the games we had looked at in the lecture.

Instead of keeping it black and white, I used Photoshop to make variations of the pixel samples in different colours. The dark blue and green variations are the ones I like the most.

Resources

3D Game Kit

https://assetstore.unity.com/packages/templates/tutorials/3d-game-kit-115747

Pixelation

https://assetstore.unity.com/packages/vfx/shaders/fullscreen-camera-effects/pixelation-65554

Experimental Audio

Week 3

In the third week of Experimental Games we approached the subject of audio: how it affects the way players experience a game, and its uses as a game mechanic.

Through musical direction we can set the tone or sonic identity of game environments and characters, among others. Audio cues are a form of feedback given to the player through sound. Audio can also be used as input, taking audio data to control game mechanics.

We also talked about how the audio direction of certain games influenced the industry and cultural resurgences.

The contents of the lecture had me wondering about the use of audio in games in general, the ways it produces spatial immersion, and how audio can influence different game mechanics.

Audio mechanic

provided scripts

In the workshop part of the lecture we were given a project with a 2D square that changes size depending on either the loudness of an audio clip or the loudness of sound picked up by a mic.

Then we were asked to use that and give it a new function.

I decided to make the square change to different colours depending on the loudness of the mic input.

Colour from Loudness

I essentially copied the code of the ScaleFromMicrophoneBehaviour script that was provided, deleted the pieces of code I did not think I would need and started from there.

What I set my code to do was to grab the colour from the square's Sprite Renderer component in the Update method and change it to a colour set by the _color variable.

I got the loudness from the LoudnessDetectionMicrophone script. Initially I was swapping the colour based on this value directly, but since the values were too small to work with I made a new integer variable (lInt) that holds the loudness multiplied by 100 and rounded, which gave me a better range of values to work with.

I then wrote a few if and else if statements that would grab this integer information and set the _color variable to another colour if the lInt variable was within the specified values.
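The logic described above can be sketched roughly as follows. This is a Python illustration of the idea rather than the Unity C# script itself; the threshold values and colour names are assumptions made for the example, since the real ranges are not listed here.

```python
def loudness_to_int(loudness: float) -> int:
    """Scale a small loudness value by 100 and round it (the lInt idea)."""
    return round(loudness * 100)


def colour_for_loudness(l_int: int) -> str:
    """Pick a colour name from the scaled loudness.

    The thresholds (5, 20, 50) and colour names are hypothetical.
    """
    if l_int < 5:
        return "white"
    elif l_int < 20:
        return "green"
    elif l_int < 50:
        return "yellow"
    else:
        return "red"


# Example: a quiet reading of 0.07 becomes 7, which falls in the "green" band.
print(colour_for_loudness(loudness_to_int(0.07)))
```

Working with whole numbers makes the ranges in the if / else if chain easier to read and tune than comparing against very small decimal values.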

Testing

Experimental Mechanics

Week 4

During Week 4 we discussed experimental mechanics and looked into what makes games play "differently". This can be due to the interaction method, the perspective or the game loop.

The first example was "Beat Saber", which brings rhythm games to the VR platform, using VR inputs as the interaction method for the rhythm gameplay.

Then we looked at "Among Us", which is unique in that it revolves around deception and players interacting with each other. It uses each player's perspective to create "confusion".

"Papers, Please" was another game we looked at. It puts the player in the role of a border inspector in a "Dystopian Document Thriller".

Other examples of games using experimental mechanics that we looked at were "World of Goo" and "Mini Metro".

Game with experimental mechanic

I used the previous week's mechanic of changing colour from microphone input. I thought of making a maze-like game where the player would need to match the colour of a door in order to progress.

I also thought about adding an enemy that would chase the player to give a sense of urgency, but I did not implement it.

I modified the previous script, changed the way it processes the values and I also added a string that sets the colour.

I then made another script for the doors/barriers that checks the colour; if the colour matches, the barrier is destroyed.
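A minimal sketch of that colour check, written in Python for illustration rather than as the Unity script. The class and method names are hypothetical, and "destroyed" is modelled as a simple flag instead of removing a game object.

```python
class Barrier:
    """A door/barrier that disappears when the player's colour matches its own."""

    def __init__(self, colour: str):
        self.colour = colour
        self.destroyed = False

    def check(self, player_colour: str) -> bool:
        """Destroy the barrier on a colour match; return whether it is gone."""
        if not self.destroyed and player_colour == self.colour:
            self.destroyed = True
        return self.destroyed


door = Barrier("green")
door.check("red")    # wrong colour, the door stays
door.check("green")  # matching colour, the door is destroyed
```

Using a string for the colour keeps the comparison simple, which is the reason for adding a colour string to the modified script.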

Download link

Experimental Narrative

Week 5

For week 5 we discussed Experimental Narrative, the main concepts being Time & Order, Perspective & Wall Breaking, and Abstraction & Emergent Narrative.

As an example of Time & Order we looked at the game "Twelve Minutes", in which the player is stuck in a 12-minute time loop, trying to piece together what is happening and to break the loop.

For Perspective & Wall Breaking we saw a clip from Metal Gear Solid on the PSX of the encounter with Psycho Mantis, who reads the player's memory card to find out which games they have played and comments on them during the encounter, thus breaking the fourth wall.

As for Abstraction & Emergent Narrative, we saw a trailer for the game RimWorld, which features an AI storyteller that produces stories and events throughout the game.

Other games we looked at were "Her Story", a narrative puzzle game, and "Not Tonight", a game similar to "Papers, Please" set in a dystopian post-Brexit United Kingdom.

Yarn

This week's workshop task was to use Yarn with Unity to create a narrative.

I am not strong when it comes to narrative, so I just wanted to test the tool and get familiar with it.

I played around with a 2D project, then decided to try it in 3D, using triggers to display text.

Test 1

Test 2

Applied Games

Week 9

During this week we talked about what applied games, alternate reality games and gamification are.

Applied games are a category of games that serve a purpose other than just entertainment, for example education. We looked at the game "Zoombinis" and watched a clip of a VR construction simulation for scaffold safety.

We talked about the concept of gamification, which is essentially applying elements commonly found in games to other areas.

Then we looked into what ARGs are and some of the terminology of this genre of games.

ARG stands for "Alternate Reality Game". It is a type of interactive storytelling experience that blurs the line between fiction and reality by incorporating elements of the real world into a fictional narrative. An example of this is "CICADA 3301".

Concept for an ARG or Applied Game

For this week's workshop task we formed groups to come up with a concept for an ARG or Applied Game.

I grouped up with Najeh and we came up with an ARG using the University as a starting point.

The secrets of UWL

QR codes around the university would send the user to a blank webpage with a single word on it.

The user would check the source code of the page in a web browser such as Google Chrome.

In the source code the user would see a piece of trivia about a place or person important to the university (current or alumni).

Hidden within this trivia there would be an encrypted word (either numbers or letters).

The different encrypted keywords would form another (fictional) piece of trivia about dark occurrences within the university. However, the encryption code would change every 30 days.

This would create a collection of dark stories, potentially giving it a cult following.

To progress things further each of these fictional trivia stories would also have a secret code within.

The code would actually be the link to a website hosted on a private server (home server), which would only be turned on for 5 minutes a day. The time of day would be hidden within the piece of dark trivia.

Within the website there would be fabricated dark imagery to support the dark trivia.
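As a rough illustration of how an encryption code that changes every 30 days could work, here is a small Python sketch. The concept does not specify a cipher, so the Caesar shift, the start date and the function names are all assumptions made for the example.

```python
from datetime import date

PERIOD_DAYS = 30
START = date(2023, 1, 1)  # hypothetical launch date of the ARG


def shift_for(today: date) -> int:
    """Return a shift between 1 and 25 that changes every 30 days."""
    period = (today - START).days // PERIOD_DAYS
    return 1 + period % 25


def _shift_text(word: str, shift: int) -> str:
    """Shift the letters A-Z by `shift` places, leaving other characters alone."""
    out = []
    for ch in word.upper():
        if "A" <= ch <= "Z":
            out.append(chr((ord(ch) - ord("A") + shift) % 26 + ord("A")))
        else:
            out.append(ch)
    return "".join(out)


def encrypt(word: str, today: date) -> str:
    return _shift_text(word, shift_for(today))


def decrypt(word: str, today: date) -> str:
    return _shift_text(word, -shift_for(today))


print(encrypt("UWL", date(2023, 1, 1)))   # first 30-day period
print(encrypt("UWL", date(2023, 1, 31)))  # next period, different result
```

A keyword found in one period would decode differently in the next, so players would need to work out the current code again each time it rotates.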

Week 10

Mod for an existing game

This week's workshop task was to come up with a mod for an existing game.

One of my favourite games is Cyberpunk 2077. When thinking of mods for that game, I would like to do something to increase the "quality of life" in the game, more specifically for the random NPCs that the player can see roaming around.

In the game Watch Dogs: Legion there is an interesting concept: you can play as any NPC in the game. The NPCs have generated routines, backstories and "family members", and there is even a value for how that NPC feels towards the player's organisation. I thought that this feature adds to the game and makes NPCs more interesting than just walking pixels on the screen.

My mod idea is to make unnamed NPCs more interesting and reactive to the player.

It would start by giving them some sort of perceived routine and more variation in their reactions to the player's actions, instead of just ducking and running when a shooting happens, for example.

There would also be time-based events, such as sales in bazaar areas that happen once every x days at x time of day.

Other events could be "rush hour" events, where traffic in the city is especially bad at certain periods of the day, and "weekend days", where entertainment areas of the city are much more populated during certain periods of time.

There could even be events like those in Grand Theft Auto, where paramedics tend to injured pedestrians, sometimes successfully "reviving" them.

Artefact 1

Asylum Escape

Group Project Members:

Angela

Najeh

Entry 1

When I started thinking about a project, I wanted to play with immersion and have a symbiotic relationship between the player and the protagonist in a first-person perspective.

Upon grouping up the idea evolved into having an "insane" protagonist that sees and hears things that are not there.

There would be 2 versions of the playable area, one as seen in a normal state and another aesthetically different, a scary version of the normal area.

Essentially I wanted to play around with giving an immersive experience through sound and visual events in VR.

Even though that is interesting, I wanted to explore something a bit more experimental.

So I came up with an idea that instead of the player taking full control of the protagonist, the player is the voices inside the protagonist's head.

Voice as input is nothing new, but there are always inventive ways to use it.

In this case, it completely changes the way the player interacts with the game.

To avoid issues of motion sickness we turned away from the VR medium. With the game being voice controlled, the "physical" interaction the player has with the game would be minimal; the player is more of an observer than a controller in this type of game, so it made more sense to experience it on a screen.

While at the start we had a whole area in mind, possibly two floors of a hospital as the walkable area, given the time limitations and possible complications with voice commands we decided it would make more sense to make an escape-room type of environment, with the protagonist stuck in a room and relying on the player's voice commands/suggestions to escape.

Unity Version 2021.2.12f1

Core Ideas:

- 3D
- 1st Person POV
- Escape Room type
- Voice Command
- Asylum
- Protagonist sees and hears things that are not there
- Player is the voices the protagonist hears
- Symbiotic Relationship between the protagonist and the player

Platform:

Windows

Inspiration:

Phasmophobia (2020)

Entry 2

Voice Controller

I found 2 ways of doing a voice controller:

- By using Language Understanding (LUIS), a cloud-based conversational AI service (aahill, 2022).
- Or by using Windows Speech Recognition, which listens for specific keywords and upon recognising them can invoke a function (thetuvix, 2021).

While LUIS handles more complex voice input, it relies on a cloud-based service that limits interactions for free users and is only free for a period of time.

In light of that I opted to use Windows Speech.

Since it was my first time making a first-person game, I started by looking at how to make a first-person controller in Unity, following an online tutorial on YouTube by Brackeys (2019).

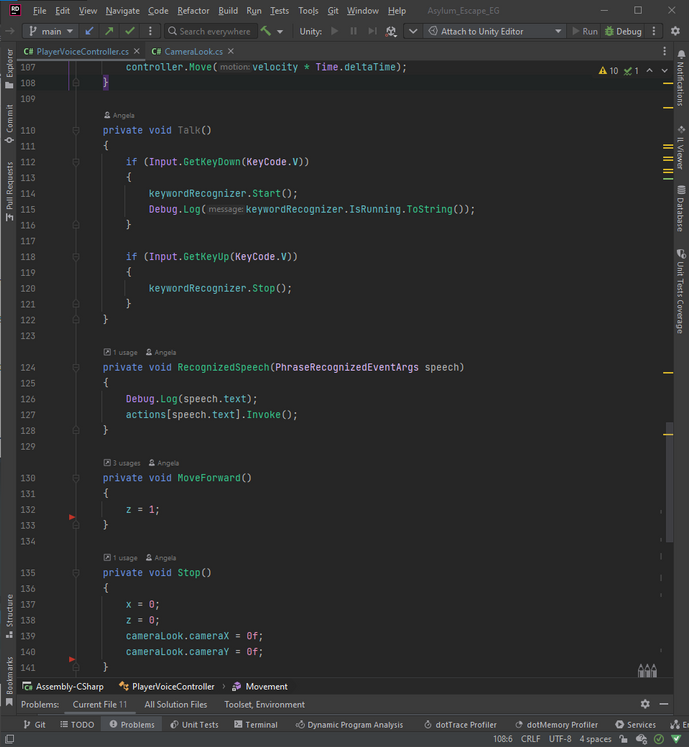

Then, after getting a sense of how the player controller worked, I looked at examples of how to use speech recognition (Dapper Dino, 2018; Jason Weimann, 2017) and made my own voice command player controller.

It still requires some fine tuning and other more specific keywords but so far it can be used to look around and walk forward.
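The keyword approach boils down to mapping each recognised phrase to a function. The sketch below shows that idea in Python, not the Unity C# controller itself. The speech recogniser is not included (a recognised phrase is simply passed in as text), and the keywords, names and movement values are hypothetical.

```python
class VoiceController:
    """Maps recognised keywords to movement actions."""

    def __init__(self):
        self.position = 0  # steps walked forward
        self.heading = 0   # facing direction in degrees
        # Keyword -> action lookup; the phrases are example keywords only.
        self.actions = {
            "walk": self.walk_forward,
            "look left": self.look_left,
            "look right": self.look_right,
        }

    def walk_forward(self):
        self.position += 1

    def look_left(self):
        self.heading = (self.heading - 90) % 360

    def look_right(self):
        self.heading = (self.heading + 90) % 360

    def on_phrase_recognised(self, phrase: str) -> bool:
        """Run the action for a recognised phrase; return False if unknown."""
        action = self.actions.get(phrase.strip().lower())
        if action is None:
            return False
        action()
        return True


controller = VoiceController()
controller.on_phrase_recognised("look left")
controller.on_phrase_recognised("walk")
```

Adding a new command then only means adding one entry to the lookup and one method, which helps when fine-tuning the keyword list.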

Voice Controller test

Voice Controller scripts

Entry 3

Project whiteboard

To organise ourselves better we kept an online whiteboard with information regarding the project.

This included the puzzle flow, an asset list and a commands list.

The contents were to be updated as we kept working on the game.

Entry 4

Peer Review

At the time of the peer review we did not have much to show: we had a greybox with working movement controls and limited interaction.

The main points raised in the feedback related to user experience: given the nature of the game, we would need to keep in mind how to help the player interact with it.

Entry 5

Objects Interaction

I found the object interaction a bit challenging to make on my own, as it was the first time I was doing something more complex than what I had worked on so far.

I made a simple inventory system that takes string values for object names. During planning we agreed beforehand on the list of objects and key items, so I scripted the interaction using those string values.

Each of the interactables had different behaviours so I coded the specific behaviour per interactable.

The main interaction keywords were "Search", "Pick up" and "Use <keyitem>".

When the user called the search method, the script would first check whether the interactibleBehaviour script reference was null. If it was not null, it would check whether the string value matched the specific intended value. If it matched, it would trigger a method in another script on the interactable game object.

I set the range of these interactables by putting a trigger box on them and using tags: on trigger enter the script would get the interactibleBehaviour component, and on trigger exit it would set it to null.

For "Pick up" I used a similar method, adding the appropriate string value from a script on the key item when it was picked up.

When using items the player had to specify which key item they were trying to use on the interactable. I used different if statements to accommodate the various situations in which the player could attempt to use a key item: not being in range of a valid interactable, not having the key item, trying to use the item on an interactable it is not meant for, and finally using it on the correct interactable.

On meeting the correct criteria, it would trigger the method in the specific interactable's script.
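The chain of checks for using a key item can be sketched like this. It is a Python illustration of the logic, not the Unity scripts; the class names, item names and response strings are hypothetical, and the trigger box is modelled by two simple methods.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Interactable:
    name: str
    required_item: Optional[str] = None  # key item this object reacts to
    used: bool = False

    def on_use(self) -> None:
        self.used = True


@dataclass
class Player:
    inventory: set = field(default_factory=set)
    in_range: Optional[Interactable] = None  # set by the trigger box

    def on_trigger_enter(self, target: Interactable) -> None:
        self.in_range = target

    def on_trigger_exit(self) -> None:
        self.in_range = None

    def pick_up(self, item: str) -> None:
        self.inventory.add(item)

    def use(self, item: str) -> str:
        """Apply the checks in order and return the protagonist's response."""
        if self.in_range is None:
            return "There is nothing here to use that on."
        if item not in self.inventory:
            return f"I do not have the {item}."
        if self.in_range.required_item != item:
            return "That does not work here."
        self.in_range.on_use()
        return f"Used the {item} on the {self.in_range.name}."


player = Player()
door = Interactable("door", required_item="key")
player.pick_up("key")
player.on_trigger_enter(door)
print(player.use("key"))
```

Returning early from each failed check keeps the cases separate and gives every situation its own response.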

What I should have done was write another script to process interaction rather than keep adding to the PlayerVoiceController script.

Scripts, updated PlayerVoiceController and item scripts

Entry 6

UX/UI

Menu and Intro

After having a working level, I shifted focus to the UI and user experience.

In the Designing for Interaction module we discussed user experience and how to apply it to our game.

I made a basic Main Menu with 3 buttons, "Start", "How To Play" and "Quit".

The Start button loads the game scene.

The How To Play button activates a "how to play" UI game object with instructions and other important details, so the player can understand the UI and how to play the game.

The Quit button exits the game application.

I also made a voice commands script for the UI so that the game could be played entirely by voice.

Taking inspiration from Phasmophobia, I made a three-part intro scene starting with the game name, followed by a disclaimer and finishing with the microphone requirements and a recommendation to play using headphones.

I made a fading animation to ease the player into the next intro section.

HUD elements

In the HUD there are 3 main elements: the journal, the microphone indicator and a responses text box.

Mic Indicator

The microphone indicator has 2 functions: to show the player that the microphone is picking up sound, and to inform the player when a keyword has been recognised.

On a recognised keyword a green check mark shows up underneath the microphone icon; it was coded to be visible for just a few seconds before disappearing.

I reused my colour-from-loudness script and the microphone loudness script from the Week 3 workshop to make the input meter; instead of changing colour, it makes the white bars visible or invisible depending on the loudness level.
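The input meter idea can be sketched as one threshold per bar. This is a Python illustration only; the number of bars and the threshold values are assumptions made for the example.

```python
# One threshold per white bar, from the quietest to the loudest bar.
# The values are hypothetical.
BAR_THRESHOLDS = [5, 20, 50]


def visible_bars(l_int: int) -> list:
    """Return, for each bar, whether it should be visible at this loudness."""
    return [l_int >= threshold for threshold in BAR_THRESHOLDS]


print(visible_bars(25))  # the first two bars are shown, the third is hidden
```

Each bar only needs to know its own threshold, so the meter can grow or shrink by editing the list.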

Responses Text Box

Since the player is NOT the protagonist of the game, it was important to make the protagonist interact with the player in some manner. Initially I wanted to have voice responses on top of text responses, but since it is a prototype I focused on getting appropriate responses; in the future it would be easier to record the responses once they are set in stone.

The response text box is composed of 2 elements, a UI text and a black text box with some opacity. The text is set through code as a string value, then set to null after a period of time; the black text box becomes active when a new string value is sent to the text and inactive when the value returns to null.
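A minimal sketch of that show-then-clear behaviour, in Python rather than Unity C#. The class name, the default of 3 seconds and the `tick` method (standing in for a per-frame update) are assumptions made for the example.

```python
from typing import Optional


class ResponseBox:
    """Shows a response for a limited time, then clears it."""

    def __init__(self, display_seconds: float = 3.0):
        self.display_seconds = display_seconds
        self.text: Optional[str] = None
        self.background_active = False
        self._remaining = 0.0

    def show(self, message: str) -> None:
        """Set the text, enable the background and restart the timer."""
        self.text = message
        self.background_active = True
        self._remaining = self.display_seconds

    def tick(self, delta_seconds: float) -> None:
        """Call once per frame with the time elapsed since the last frame."""
        if self.text is None:
            return
        self._remaining -= delta_seconds
        if self._remaining <= 0:
            self.text = None
            self.background_active = False


box = ResponseBox(display_seconds=2.0)
box.show("I can hear you.")
box.tick(1.0)  # still visible
box.tick(1.0)  # time is up, text and background are cleared
```

Restarting the timer in `show` means a new response replaces the old one and gets its full display time.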

Journal

In game the player has access to a journal. They can either click the corner of the screen, where part of the journal can be seen, or say "Open Journal" to bring it up. I made an animation that brings it to the front from the corner once the open journal method is called.

In the Journal the player can see all the necessary commands they can use to play the game.

There is a section of the journal with a few lines indicating the plot of the game: the protagonist does not know where they are and takes note of the voices they can hear.

Also in the Journal I added a visualisation of the player's inventory. I made item slots which would become active with the name of the key items when these were picked up. This way even if the player did not know the name of the item they had picked up, they could check the journal to find the key item's name.

Since the constant voices in the game could be disturbing to some players, I added buttons in the journal to turn them on or off.

UI/HUD screenshots

Scripts

Entry 7

Some sound and wrap up

Sound-wise I added sounds for picking up items and for interactions, and I also tried to adjust the sound levels to match the ambience.

Throughout this project I mainly focused on gameplay programming and most of the programming tasks, while Najeh focused more on level design, assets and most of the artistic aspects.

Submission

Resources:

Microphone image: https://www.iconpacks.net/free-icon/microphone-342.html

Headphones image: https://www.iconpacks.net/free-icon/headphone-1402.html

Title font: https://www.dafont.com/old-london.font

Sound effects: https://pixabay.com/sound-effects/

References:

aahill (2022) Language Understanding (LUIS) Overview - Azure. Available at: https://learn.microsoft.com/en-us/azure/cognitive-services/luis/what-is-luis (Accessed: 25 February 2023).

Brackeys (2019) FIRST PERSON MOVEMENT in Unity - FPS Controller. Available at: https://www.youtube.com/watch?v=_QajrabyTJc (Accessed: 25 February 2023).

Dapper Dino (2018) How to Add Voice Recognition to Your Game - Unity Tutorial. Available at: https://www.youtube.com/watch?v=29vyEOgsW8s (Accessed: 25 February 2023).

Jason Weimann (2017) HowTo Use Voice Control / Commands in your Unity3D Game. Available at: https://www.youtube.com/watch?v=Xau3hFEcn0U (Accessed: 25 February 2023).

thetuvix (2021) Voice input in Unity - Mixed Reality. Available at: https://learn.microsoft.com/en-us/windows/mixed-reality/develop/unity/voice-input-in-unity (Accessed: 25 February 2023).

Phasmophobia (2020). Microsoft Windows [Game]. Kinetic Games, United Kingdom.

Artefact 2

Ideas for Assessment 2

rough ideas

Idea 1

VR experience with spatial audio

Idea 2

Treasure hunt type of game based on real time: players would have to search for the answers in person, outside of the game application. Answers to riddles would be in specific places where a QR code would be "hidden"; answers could also come from specific people who are frequently at the university.

Idea 3

Audio-based stealth system. A 3D game where everything makes noise: movement, bumping into objects (noise dependent on move speed) and the player character's breathing. One enemy character would follow noise sources depending on their loudness and distance.

Idea 4

VR game that uses hand tracking

building game with tools (hammer, nails, saw)

Idea 5

VR game that uses hand tracking

infinite runner

player needs to cut, move and/or dodge objects coming their way

Entry 1

From these ideas, I decided to make the project a VR game for the Meta Quest 2 platform.

I looked at gameplay of various VR games to take into consideration what types of interactions and games are available.

Then I proceeded to actually start the project in Unity. Ideally after having the setup done I could test the capabilities of both controller and hand tracking.

Setup

During the setup research I found 2 XR interaction methods I could use: OpenXR and the Oculus Integration.

I set up projects using both methods before deciding which I felt worked best for me.

As this is a new area of work for me, I felt that the setup process alone took relatively long, as did testing the features each toolkit offered before choosing one.

While OpenXR offers more compatibility with other platforms, the Oculus Integration initially felt more natural to use out of the box.

PS: It was not, they should really work more on their documentation for Oculus Integration.

Entry 2

Rather than making a "game", I thought of using the opportunity to explore and test the capabilities of hand tracking as an interaction medium.

Object slicing

Perhaps one of the most satisfying interactions that can be done in VR is cutting objects in half with some type of sword.

I set out to try to do just that, but instead of making the sword a child object of the hand/controller, I made it a grabbable tool that can be picked up using either hand tracking or controllers.

With the help of a tutorial by Valem and using EzySlice I was able to make a slicer saber that is able to slice primitives in half.

Entry 3

Object Spawner

Entry 4

Peer Review

Entry 5

UI

Submission